How to Use Qwen3-Coder and GLM-4.5 for Free Vibe Coding

With open-source large models on the rise, how can you use Qwen3-Coder and GLM-4.5 for vibe coding at no cost?

Introduction: Vibe Coding

Recently, the term “Vibe Coding” has been gaining popularity in the tech community. Unlike traditional development workflows, Vibe Coding encourages you to interact with large models in natural language: you “speak out” your ideas and let the AI generate code, test logic, and write documentation, which makes the whole development experience feel easy and unconstrained.

But don’t mistake this for “mindless fun”: Vibe Coding actually requires solid overall process design, prompt engineering, and tool mastery.

Using commercial models for Vibe Coding involves some costs, which is a significant barrier for newcomers.

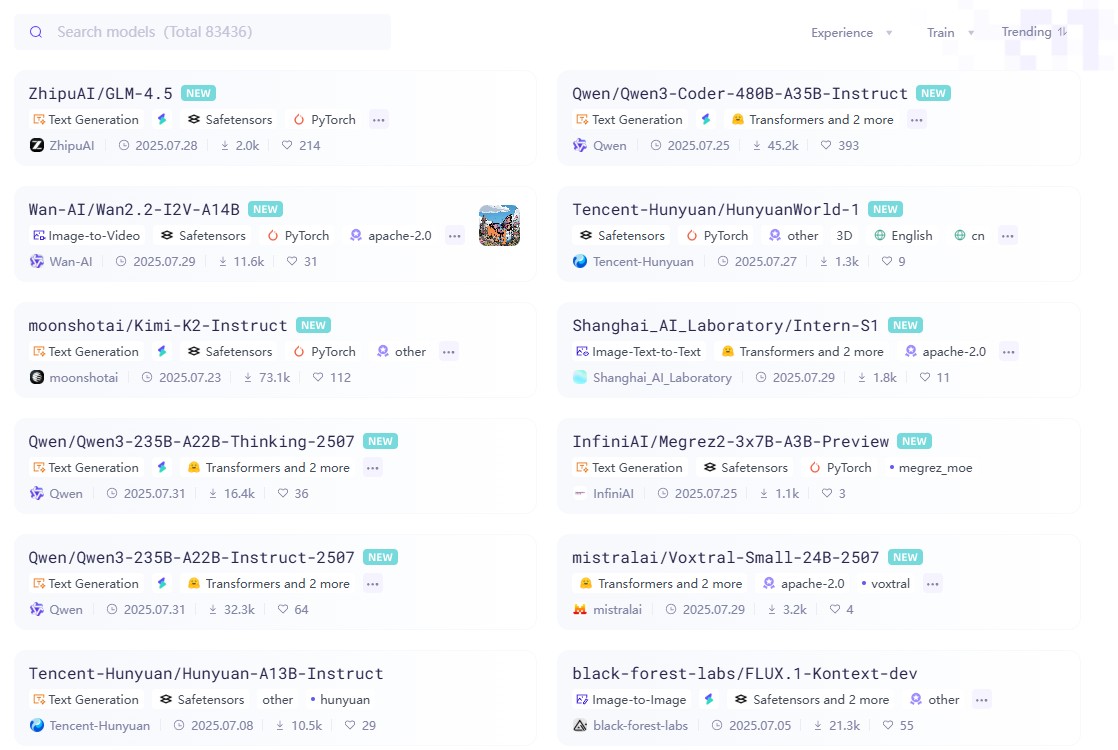

With the release of Qwen3-Coder and GLM-4.5, Chinese open-source large models are beginning to outperform their peers in coding capability and cost-effectiveness. Individuals and small teams can try Vibe Coding at no cost through online chat, ModelScope’s free quota, and VSCode plugins.

Official Chat Platforms

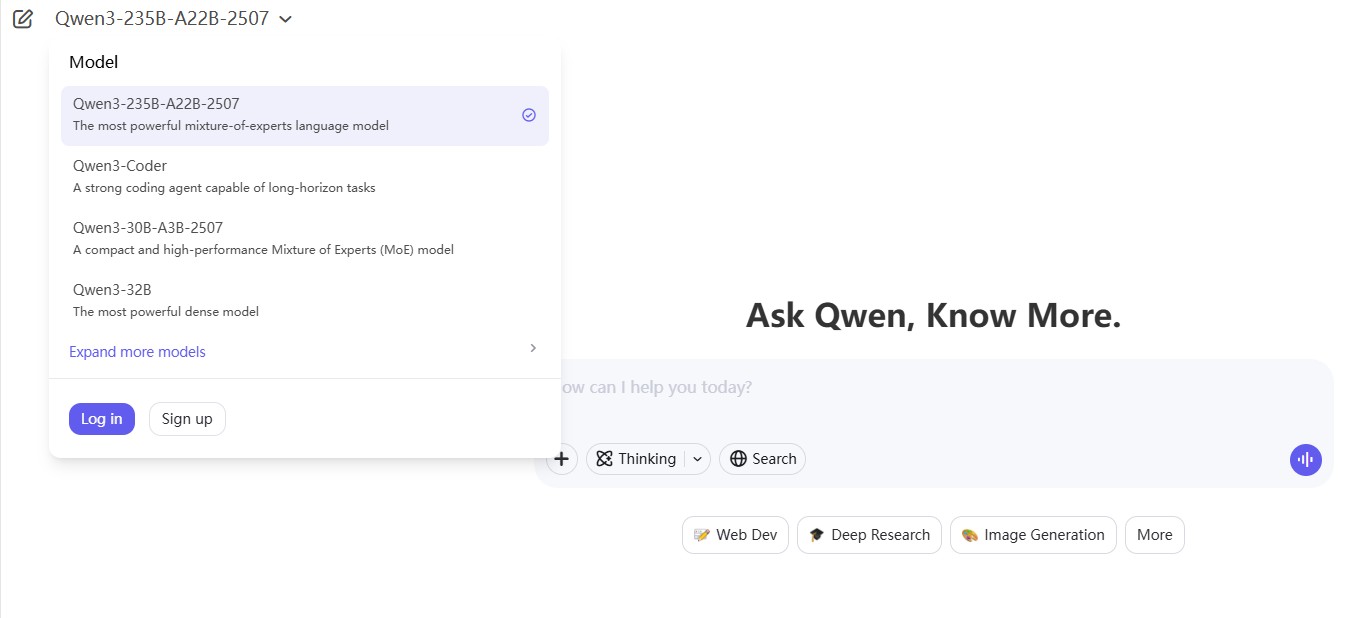

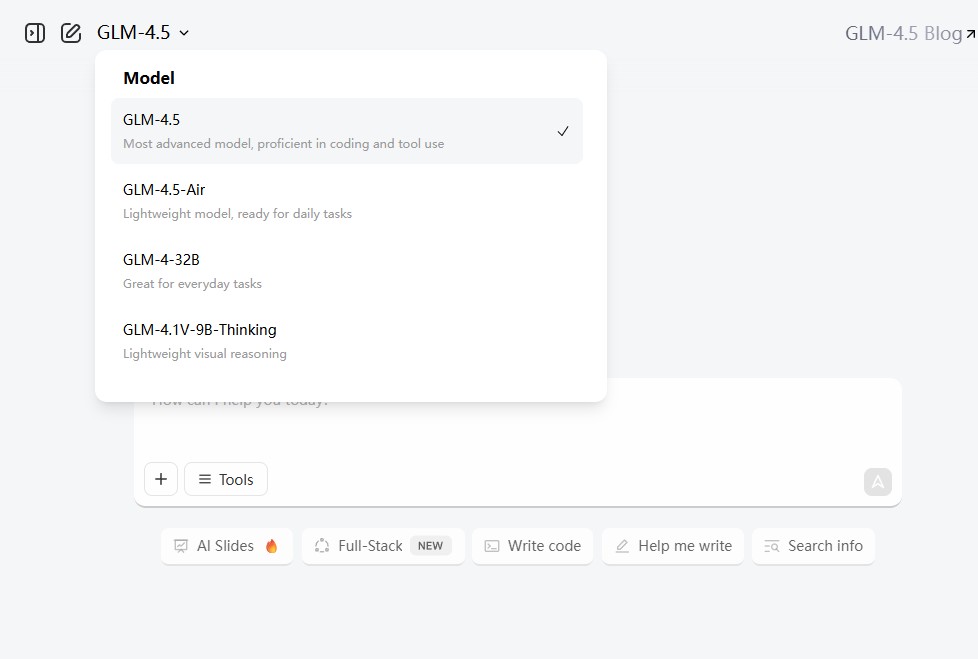

You can try Qwen3-Coder and GLM-4.5 online directly on their official chat websites:

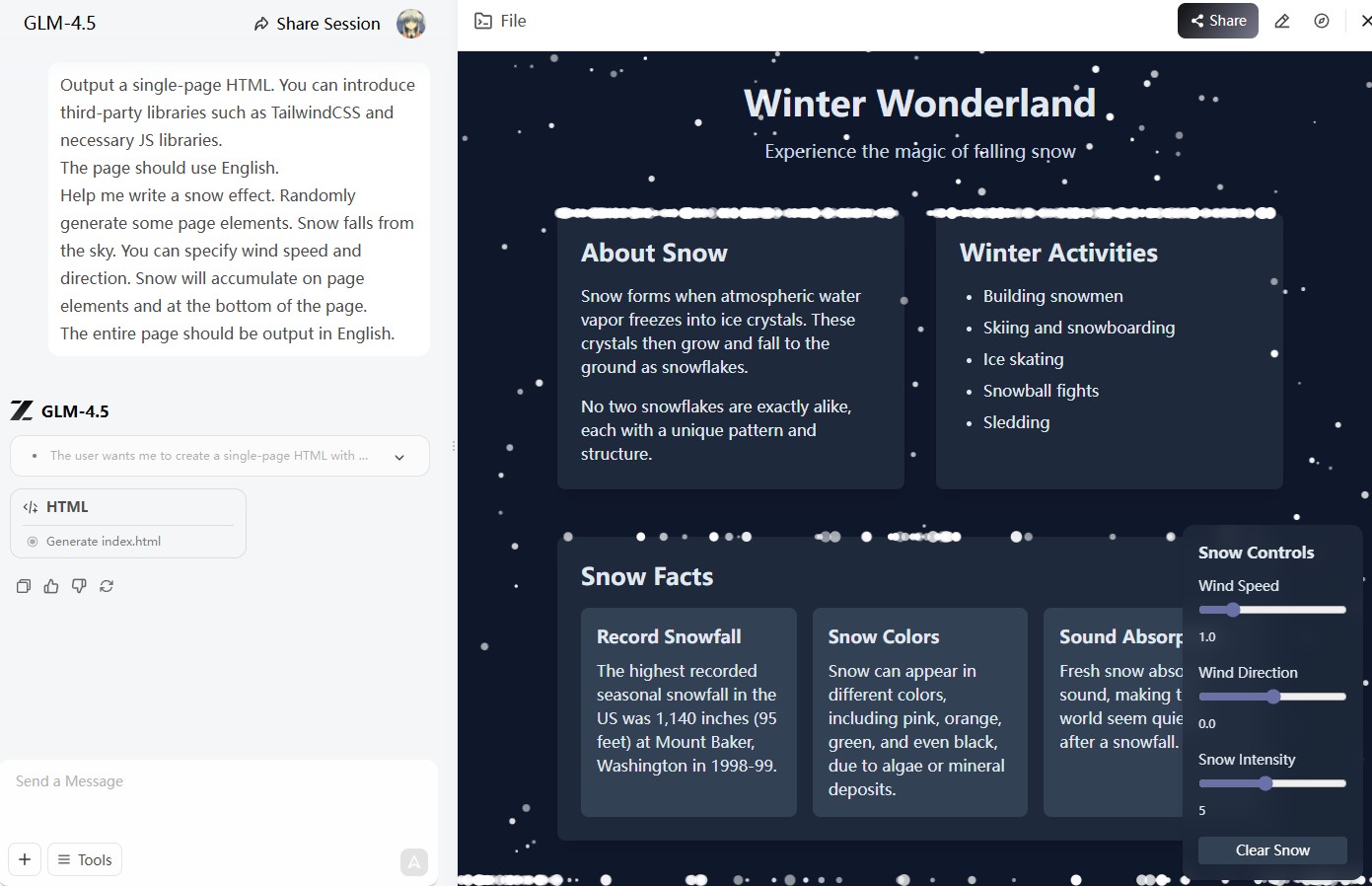

Both platforms provide single-page HTML previews where you can directly view generated pages.

For example, I asked the AI to create a snowy page, which can be previewed directly on the right side:

https://chat.z.ai/space/r0pej663mqk1-art

The chat sites are suitable for quick experiments and generating small projects, but they are not recommended for real coding work: copy-pasting is cumbersome, there is no IDE integration, and after repeated edits the AI may drop existing code and introduce errors.

ModelScope Platform’s 2000 Free Calls per Day

1. Obtaining Quotas and Registration Process

You might have seen experience-sharing posts on Zhihu or developer communities about “multi-accounting to farm ModelScope freebies.” The typical process is:

- Register at the ModelScope community: modelscope.cn

- Go to [Model Services], select models like Qwen3-Coder and GLM-4.5, and apply for API access.

- Users receive a daily quota of 2000 free calls (quota type, validity, and available models are periodically adjusted; see official docs for details).

This quota is enough for a full day’s use. A single call to Qwen3-Coder or GLM-4.5 typically takes a prompt of around a hundred tokens and returns over a thousand tokens of output, so the quota covers the main development work and coding experiments of a small personal project.

ModelScope’s API is compatible with the OpenAI API format and, in beta, with the Anthropic format, so it works with most AI coding plugins (such as cline and roocode) and can also be used with Claude Code.

Friendly reminder: This is different from Alibaba’s Bailian platform, which charges fees.
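As a rough illustration of what “OpenAI-compatible” means in practice, here is a minimal Python sketch that builds a chat completion request using only the standard library. The base URL, the model ID, and the helper names are assumptions for illustration and are not taken from this article; check the ModelScope documentation for the current values before relying on them.

```python
import json
import urllib.request

# Assumptions, not confirmed by the article: the base URL and the model ID.
# Look both up in the ModelScope documentation before use.
BASE_URL = "https://api-inference.modelscope.cn/v1"
MODEL_ID = "Qwen/Qwen3-Coder-480B-A35B-Instruct"


def build_chat_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the first choice's text.

    Each call counts against the daily free quota.
    """
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

Calling `send(build_chat_request("Write a function that reverses a string.", key))` would perform one real API call. Plugins such as cline do the equivalent internally once you give them a base URL, a key, and a model name.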

Official VSCode Plugin Integration (The Easiest Way)

Note that free versions might use your data for training, so keep your third-party service keys safe. Put keys in environment variables or in separate config files (and configure the plugins to ignore those files), and avoid writing keys directly in code so that they are not uploaded.
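A minimal sketch of the environment-variable approach in Python follows. The variable name `MODELSCOPE_API_KEY` and the helper `load_api_key` are hypothetical names chosen for this example, not something a particular plugin or platform requires.

```python
import os


def load_api_key(var_name: str = "MODELSCOPE_API_KEY") -> str:
    """Return the API key from the environment, or fail with a clear message.

    The default variable name is a hypothetical choice for this example.
    """
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(
            f"Set the {var_name} environment variable instead of hardcoding the key."
        )
    return key
```

Because the key never appears in a source file, it stays out of anything the editor or plugin sends along with your code.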

Tongyi Lingma (Qwen3-Coder Official IDE Plugin)

- Go to the VSCode extension marketplace, search for and install “Tongyi Lingma” or “TONGYI Lingma”

- Log in with your Alibaba Cloud account; the plugin connects to Qwen3-Coder automatically, with no manual API key configuration

- Provides local completion, whole-section/file optimization, code explanation, and one-click unit test generation, with an experience comparable to GitHub Copilot

- No limit on the number of calls and no fees, ideal for individuals and small teams

Remember to disable its memory feature in settings: the more it remembers, the more hallucinations it may generate. It also supports JetBrains and other IDEs.

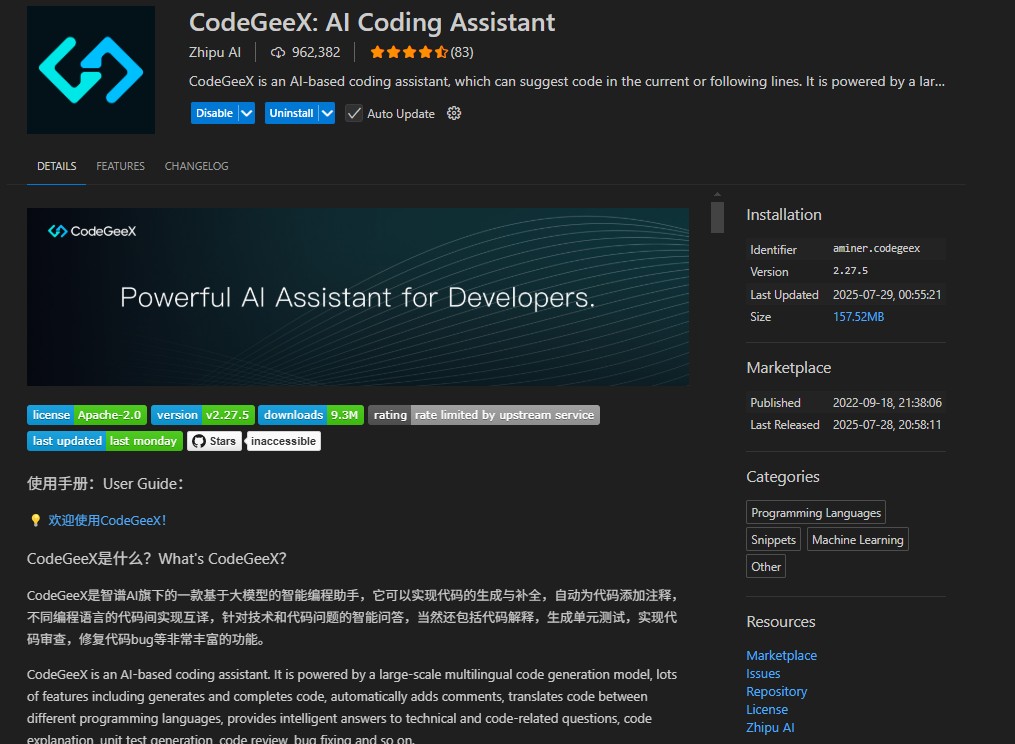

CodeGeeX (GLM-4.5 Official IDE Plugin by Zhipu AI)

- Search and install “CodeGeeX” from VSCode extensions

- Log in with a Zhipu AI account to start using GLM-4.5

Vibe Coding Practical Tips

Finally, the essence of Vibe Coding lies in “conversational development.” Here are a few practical tips to help you get the hang of it faster:

- Start with the Spec, not the code: Don’t say “write me a login page” directly. Instead, clarify requirements with AI first: “Let’s design a login page with username and password inputs and a login button. Clicking the button should call the /api/login endpoint. Handle loading and error states.” This greatly improves code quality.

- Generate in small, quick steps: Have the AI generate the HTML structure first, then the CSS styles, and finally the JavaScript logic. Confirm and tweak each step, as in pair programming with a junior coder. Use UI libraries that AI handles well, such as tailwindcss and shadcn/ui, for more standardized components and much less code, especially CSS; generating everything at once would overload the context.

- Make good use of “continue” and “optimize”: When AI outputs incomplete or flawed code, respond directly with “This code has some issues, please fix...” or “Can this function be optimized for performance?” Treat it as your coding partner.

- Let AI help with documentation and tests: After the code is done, say things like “Okay, now generate JSDoc comments for this function” or “Write a unit test case for this component.” Offload the tedious work as well.

Through this approach, you are no longer just a “code user” but the “conductor” of the development process, which is the charm of Vibe Coding.
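The “Spec first, then small steps” tips can also be sketched as a small Python helper that turns a spec into an opening prompt plus one follow-up message per step. The `Spec` class, the function names, and the exact prompt wording are all hypothetical, written for illustration only; adapt them to your own workflow.

```python
from dataclasses import dataclass, field


@dataclass
class Spec:
    """A small, hypothetical container for a feature spec."""
    goal: str
    elements: list[str] = field(default_factory=list)
    behaviors: list[str] = field(default_factory=list)


def spec_to_prompt(spec: Spec) -> str:
    """Render the spec as the first message of the conversation."""
    lines = [f"Let's design {spec.goal}.", "It should contain:"]
    lines += [f"- {item}" for item in spec.elements]
    lines.append("Required behavior:")
    lines += [f"- {item}" for item in spec.behaviors]
    lines.append("Confirm the spec and ask about anything unclear before writing code.")
    return "\n".join(lines)


def stepwise_requests(spec: Spec, steps: list[str]) -> list[str]:
    """The first message carries the spec; each later message asks for one step."""
    return [spec_to_prompt(spec)] + [
        f"Now generate only the {step}. Keep everything already agreed unchanged."
        for step in steps
    ]


login = Spec(
    goal="a login page",
    elements=["username input", "password input", "login button"],
    behaviors=[
        "Clicking the button calls the /api/login endpoint",
        "Handle loading and error states",
    ],
)
messages = stepwise_requests(login, ["HTML structure", "CSS styles", "JavaScript logic"])
```

Here `messages` holds four prompts to send one at a time, reviewing the AI’s answer after each before moving on.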

Conclusion and Recommendations

- Individual developers/small teams: ModelScope’s free quota + Tongyi Lingma/CodeGeeX plugins can meet almost all daily AI coding needs, enabling zero-cost development.

- Seeking ultimate automation: For batch tasks or CI/CD integration, combine direct API calls with streaming output and caching strategies to build customized automated workflows.

- Scenario choice: Qwen3-Coder shines in Agentic Coding; GLM-4.5 is stronger in full-stack abilities and overall reasoning. Both are top-tier free code assistants.

- Vibe Coding core: The key to mastering Vibe Coding is Spec first. Communicate requirements with AI, clarify details, guide step-by-step code generation and optimization, ultimately achieving efficiency and quality.
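For the caching strategy mentioned under automation, one possible shape is a small on-disk cache keyed by model and prompt, so that repeated batch or CI runs do not spend quota on identical requests. This is a sketch under assumptions: `cached_completion` and the `call_model` callback are hypothetical names, and `call_model` stands in for whatever function actually calls the API.

```python
import hashlib
import json
from pathlib import Path
from typing import Callable


def cached_completion(
    prompt: str,
    model: str,
    call_model: Callable[[str, str], str],
    cache_dir: str = ".llm_cache",
) -> str:
    """Return a cached answer for (model, prompt), calling the model only on a miss.

    call_model is a hypothetical callback taking (model, prompt) and returning text.
    """
    key = hashlib.sha256(json.dumps([model, prompt]).encode("utf-8")).hexdigest()
    path = Path(cache_dir) / f"{key}.txt"
    if path.exists():
        return path.read_text(encoding="utf-8")
    result = call_model(model, prompt)
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(result, encoding="utf-8")
    return result
```

Hashing the model name together with the prompt keeps answers from different models apart. Note that a cache like this only helps when identical prompts should produce identical output, so it fits deterministic batch jobs better than interactive sessions.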

FAQ

1. What’s the difference between Qwen3-Coder and GLM-4.5, and how should I choose?

Answer: Both are top-tier large code models but with different focuses.

- Qwen3-Coder: More focused on Agentic Coding, acting like an intelligent agent to handle complex multi-step coding tasks. Suitable for deep integration and automation.

- GLM-4.5: A more versatile model, strong in code ability and balanced in general reasoning and full-stack tech support. If you want a “Swiss army knife” that can both code and handle various tech Q&A, GLM-4.5 is a good choice.

Overall, it’s recommended to try both and decide based on your specific projects and personal preference.

2. What if the large model outputs buggy code? Will it lead my coding astray?

Answer: AI is always an assistive tool; developers still make the final decisions and do the reviews. Best practice is a “Spec-first” approach: clearly specify requirements, let AI handle the tedious coding, and have developers validate, test, and integrate the result. This human-AI collaboration can effectively guard against “AI confidently spouting nonsense.”