DeepSeek V3.1 Release Overview: Performance, Pricing, and Feature Highlights

A brief summary of the main performance, pricing, and feature highlights of the DeepSeek V3.1 release, along with comparisons to mainstream large models.

Overview

DeepSeek V3.1 is the latest flagship open-source large model from Chinese AI startup DeepSeek, quietly released in August 2025. With 685 billion parameters, a Mixture of Experts (MoE) architecture, and a permissive MIT license, it quickly attracted attention from developers and industry worldwide. V3.1 focuses on efficient inference, strong coding and mathematical abilities, and very low commercial usage costs. It has become a reference point in open-source AI and competes directly with proprietary mainstream models such as GPT-5 and Claude 4.1.

Key Points

1. Technology and Architecture

- Model Size and Architecture:

- 685 billion parameters (the model card lists 685B, while the detailed description says 671B). Because of the Mixture of Experts (MoE) architecture, only about 37 billion parameters are activated per token, which significantly reduces inference and training compute.

- Supports 128K ultra-long context to meet the needs of long documents, multi-turn conversations, and code analysis.

- Weights are available in multiple numeric formats (BF16, FP8, F32, and others) to suit different hardware.

- Supports a standard chat mode as well as a hybrid inference mode driven by special "thinking/tool call" tokens, unifying V3 and R1 in a single model. Going forward, the official API will no longer serve V3 and R1 separately: DeepSeek Chat becomes V3.1 with thinking mode disabled, and Reasoner becomes V3.1 with thinking mode enabled.
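The chat/reasoner split described above can be sketched as a small request builder. This is a minimal illustration, not the official client: it assumes the API accepts an OpenAI-style chat completions payload and that the model identifiers are `deepseek-chat` (thinking disabled) and `deepseek-reasoner` (thinking enabled). The helper name `build_chat_request` and its parameters are hypothetical.

```python
from typing import Any


def build_chat_request(prompt: str, thinking: bool = False,
                       max_tokens: int = 1024) -> dict[str, Any]:
    """Build an OpenAI-style chat payload for DeepSeek V3.1.

    Assumption: "deepseek-chat" maps to V3.1 with thinking disabled and
    "deepseek-reasoner" maps to V3.1 with thinking enabled.
    """
    model = "deepseek-reasoner" if thinking else "deepseek-chat"
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }


if __name__ == "__main__":
    # Same prompt, two modes: only the model name changes.
    print(build_chat_request("Refactor this function.")["model"])
    print(build_chat_request("Prove this lemma.", thinking=True)["model"])
```

The payload would then be sent to the provider's chat completions endpoint with any HTTP client. Because both modes are served by the same underlying model, switching modes is a one-field change rather than a change of deployment.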

2. Performance

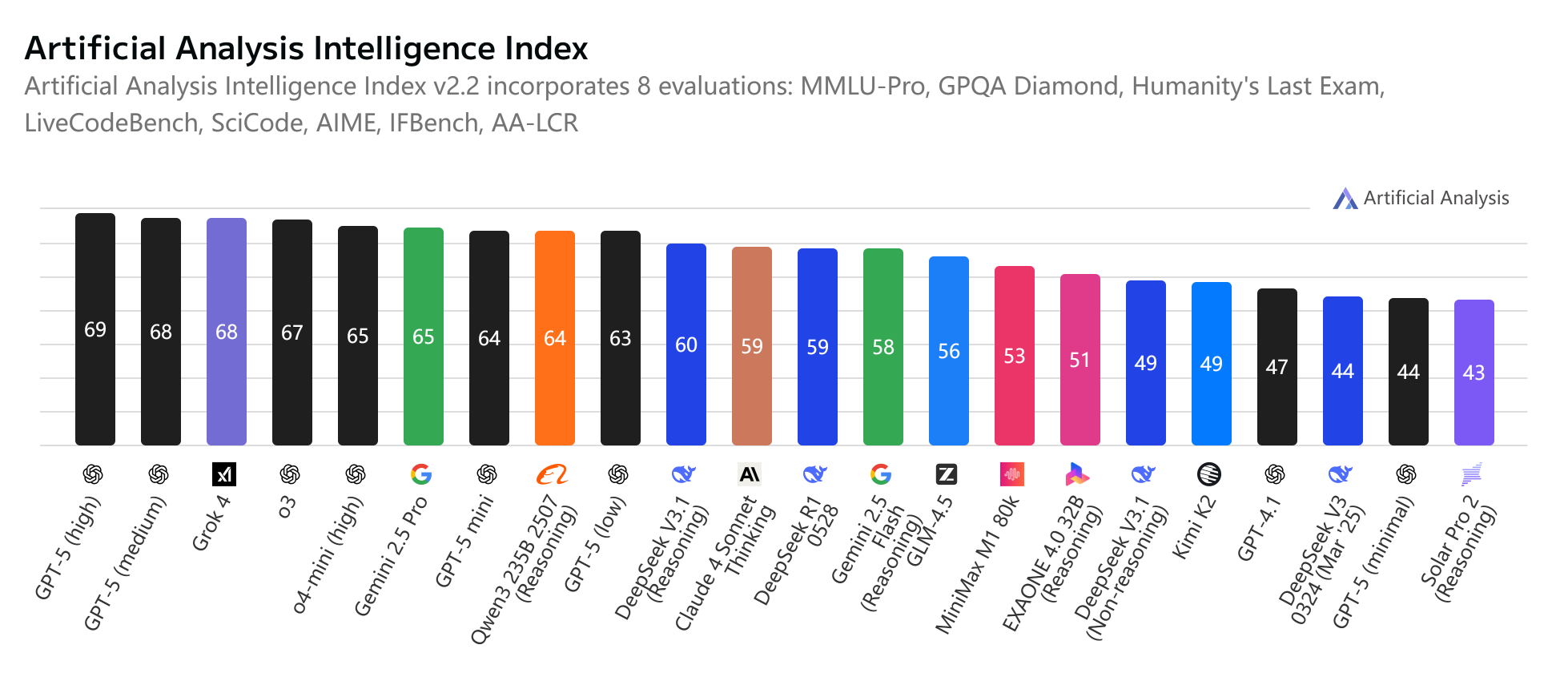

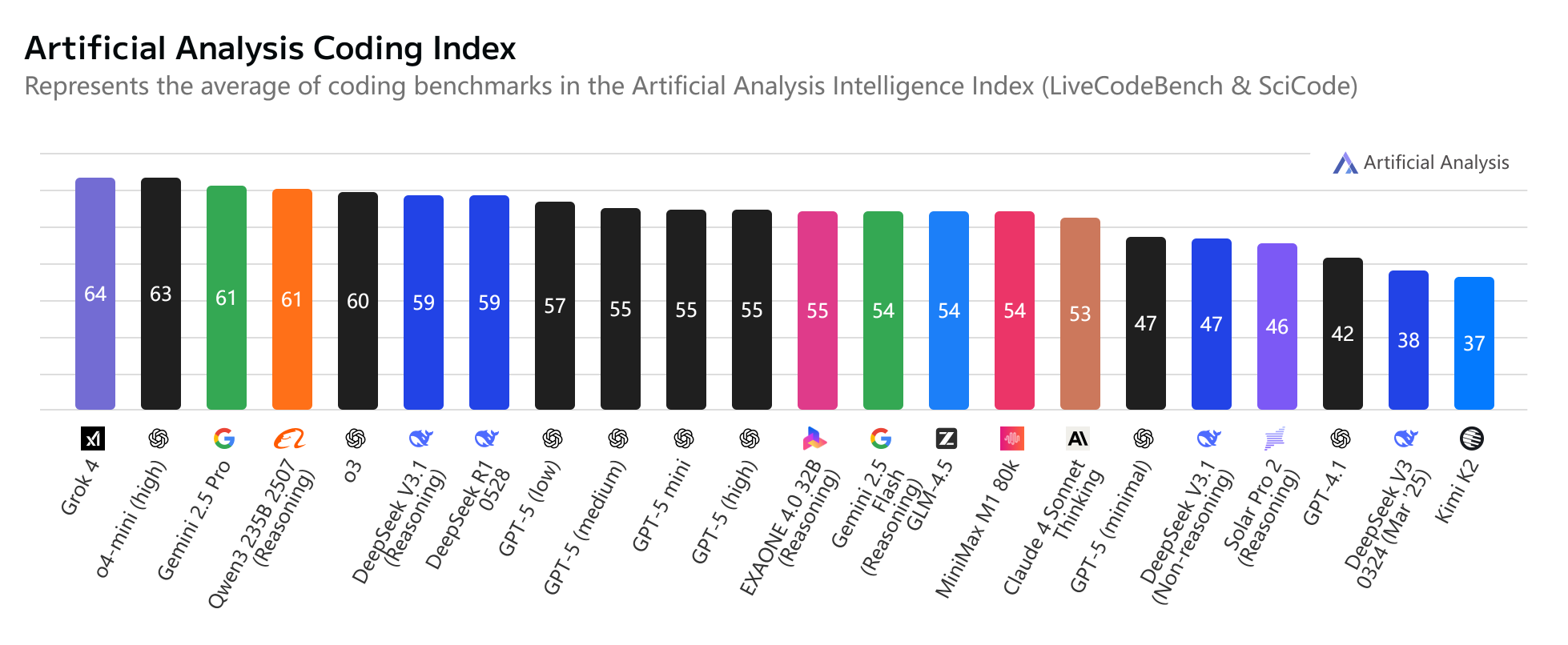

Images sourced from: Artificial Analysis

Compared with the earlier V3 and R1 releases, DeepSeek V3.1 with thinking mode disabled improves on V3 in many areas, while with thinking mode enabled it is broadly comparable to R1.

- Outstanding Coding and Math Abilities:

- Aider programming benchmark pass rate is 71.6%, surpassing Claude Opus 4 and approaching or partially exceeding GPT-5, with excellent performance in code generation, debugging, and refactoring. (Reference: Deepseek v3.1 scores 71.6% on aider – non-reasoning sota)

- dirname: 2025-08-19-17-08-33--deepseek-v3.1

test_cases: 225

model: deepseek/deepseek-chat

edit_format: diff

commit_hash: 32faf82

pass_rate_1: 41.3

pass_rate_2: 71.6

pass_num_1: 93

pass_num_2: 161

percent_cases_well_formed: 95.6

error_outputs: 13

num_malformed_responses: 11

num_with_malformed_responses: 10

user_asks: 63

lazy_comments: 0

syntax_errors: 0

indentation_errors: 0

exhausted_context_windows: 1

prompt_tokens: 2239930

completion_tokens: 551692

test_timeouts: 8

total_tests: 225

command: aider --model deepseek/deepseek-chat

date: 2025-08-19

versions: 0.86.2.dev

seconds_per_case: 134.0

total_cost: 1.0112

costs: $0.0045/test-case, $1.01 total, $1.01 projected

- Strong mathematical reasoning and complex logic problem-solving ability (excellent performance on large public benchmarks such as AIME and MATH-500).

- Text comprehension and knowledge Q&A benchmarks (e.g., MMLU) are close to top models like GPT-4 and Claude 3.

- Improved Reasoning and General Capabilities:

- Chatting, coding, and reasoning merged into one, eliminating the complexity of switching models and improving development and deployment efficiency.
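The headline figures in the Aider log above can be cross-checked from its raw counts. The sketch below uses only numbers that appear in that log. The function names are illustrative, and the assumption that `percent_cases_well_formed` is derived from `num_with_malformed_responses` is an inference from the arithmetic, not something the log states.

```python
# Raw values copied from the Aider benchmark log above.
TEST_CASES = 225
PASS_NUM_1 = 93
PASS_NUM_2 = 161
NUM_WITH_MALFORMED = 10
TOTAL_COST_USD = 1.0112


def percent(part: int, total: int) -> float:
    """Percentage rounded to one decimal place, as in the log."""
    return round(100 * part / total, 1)


def cost_per_case(total_cost: float, cases: int) -> float:
    """Average cost per test case in USD, rounded to four decimals."""
    return round(total_cost / cases, 4)


if __name__ == "__main__":
    print(percent(PASS_NUM_1, TEST_CASES))   # pass_rate_1
    print(percent(PASS_NUM_2, TEST_CASES))   # pass_rate_2
    print(percent(TEST_CASES - NUM_WITH_MALFORMED, TEST_CASES))
    print(cost_per_case(TOTAL_COST_USD, TEST_CASES))
```

The results match the logged `pass_rate_1` (41.3), `pass_rate_2` (71.6), `percent_cases_well_formed` (95.6), and the $0.0045 per-case cost.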

3. Pricing and Openness

- Extremely Low Usage Cost:

- API pricing is approximately $0.56 per million input tokens and $1.68 per million output tokens (effective September 2025), more than 90% cheaper than proprietary models for the same workload.

- A full programming benchmark run costs about $1 (the 225-case Aider run above totaled $1.01), compared with $68 for Claude Opus 4 and about $56–$70 for GPT-4/5, greatly reducing AI expenses for companies and developers.

- MIT Open Source License:

- Free for commercial use, allows secondary development, customization, and redistribution, greatly facilitating innovation and industrial application.

- Model weights can be downloaded directly from platforms like Hugging Face, and the model is also available on demand via API. Local deployment, however, requires very high compute resources and about 700 GB of storage.
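The per-token prices quoted above translate into a workload cost as follows. This is a rough sketch under stated assumptions: it applies the two list prices uniformly and ignores any caching discounts or off-peak pricing the provider may offer. The function name and the example token counts are hypothetical.

```python
# List prices quoted above, in USD per million tokens.
INPUT_USD_PER_M = 0.56
OUTPUT_USD_PER_M = 1.68


def estimate_cost_usd(input_tokens: int, output_tokens: int,
                      input_rate: float = INPUT_USD_PER_M,
                      output_rate: float = OUTPUT_USD_PER_M) -> float:
    """Estimate API cost in USD from token counts and per-million rates."""
    return (input_tokens / 1_000_000 * input_rate
            + output_tokens / 1_000_000 * output_rate)


if __name__ == "__main__":
    # Hypothetical workload: 2M input tokens and 500K output tokens.
    print(f"${estimate_cost_usd(2_000_000, 500_000):.2f}")  # prints $1.96
```

Passing a competitor's rates through `input_rate` and `output_rate` gives a like-for-like comparison for the same token counts.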

4. Features and Use Cases

- Supports long document processing, enterprise-scale batch code generation/review, educational programming assistant, scientific data analysis, and many other scenarios.

- Native tool calls, search injection, and code agents provide rich support for R&D and automation.

- Flexible integration with multiple development environments including VSCode, JetBrains IDEs, Aider CLI tools, and various CI/CD workflows.

- The official DeepSeek API already supports the Anthropic API format, so DeepSeek can be used directly with Claude Code.

5. Competitor Comparison and Value Positioning

- Compared with GPT-5/Claude 4.1:

- Performance is close to or slightly better than the Claude 4 series; reasoning capability and openness surpass the proprietary GPT-5 API.

- Pricing is a clear advantage, suitable for large-scale calls and innovative development; proprietary models still have advantages in ecosystem, security, compliance, and enterprise-level deep applications.

- DeepSeek-V3.1 is more suitable for budget-sensitive developers, startups, and research institutions seeking customization and extreme cost-effectiveness.

- Drawbacks and Limitations:

- The model is huge, and local private deployment requires very high compute resources, which makes it impractical for most individual users.

- Adoption in Western markets faces realistic barriers due to security reviews and geopolitical factors.

- Certain ultra-complex reasoning and fine-grained multimodal tasks are still slightly behind top proprietary models.
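To give a sense of the deployment burden noted above, the raw weight size can be estimated from the parameter count and the bytes per parameter in each published format. This is a back-of-the-envelope sketch: it assumes every parameter is stored in a single format and ignores file metadata, the KV cache, and activation memory. The helper name is hypothetical.

```python
# Bytes per parameter for the weight formats mentioned in this overview.
BYTES_PER_PARAM = {"F32": 4, "BF16": 2, "FP8": 1}


def weight_size_gb(num_params: float, fmt: str) -> float:
    """Approximate size of the raw weights in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[fmt] / 1e9


if __name__ == "__main__":
    params = 685e9  # parameter count listed on the model card
    for fmt in ("FP8", "BF16", "F32"):
        print(f"{fmt}: ~{weight_size_gb(params, fmt):,.0f} GB")
```

At one byte per parameter the estimate is roughly 685 GB, which is in line with the storage figure of about 700 GB quoted earlier. Wider formats scale that proportionally.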

Conclusion

DeepSeek V3.1, with excellent cost-performance, strong general and specialized capabilities, and an open licensing strategy, has become a leading product among current open-source large models. It is especially appealing to teams with high-frequency AI usage that want to control costs while continuing to innovate, and it has the potential to make AI more accessible worldwide. Enterprises and developers can weigh ecosystem maturity against model performance according to their needs and choose between the public API, local deployment, or a hybrid of the two.